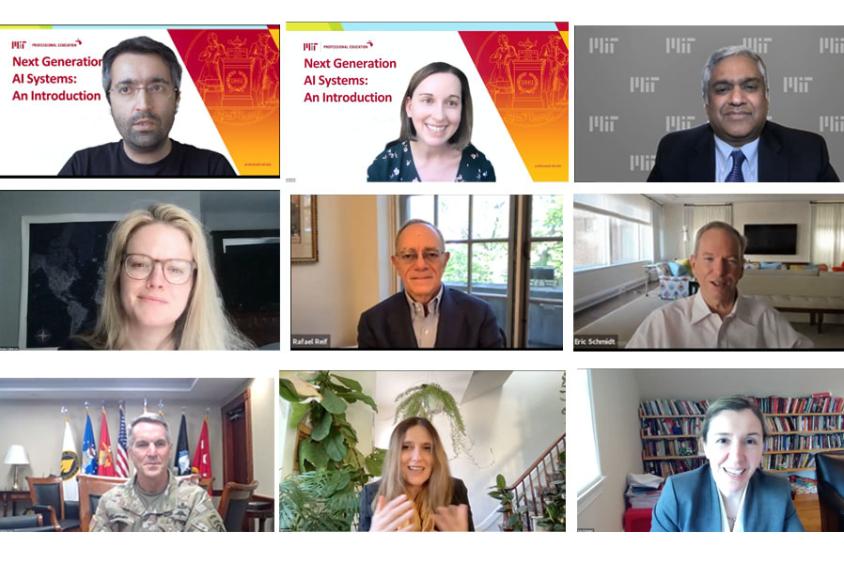

A unique collaboration with US Special Operations Command

An online course developed in collaboration with USSOCOM, the MIT School of Engineering, and MIT Professional Education featured speakers on artificial intelligence applications and implications. Top row, left to right: Sertac Karaman, Julie Shah, and Anantha Chandrakasan. Middle row, left to right: Stephanie Culberson, L. Rafael Reif, and Eric Schmidt. Bottom row, left to right: General Richard Clarke, Regina Barzilay, and Asu Ozdaglar.

When General Richard D. Clarke, commander of the U.S. Special Operations Command (USSOCOM), visited MIT in fall 2019, he had artificial intelligence on his mind. As the commander of a military organization tasked with advancing U.S. policy objectives and with predicting and mitigating future security threats, he knew that the acceleration and proliferation of artificial intelligence technologies worldwide would reshape the environment in which USSOCOM operates.

Clarke met with Anantha P. Chandrakasan, dean of the School of Engineering and the Vannevar Bush Professor of Electrical Engineering and Computer Science. After touring multiple labs, the two agreed that MIT, as a hub for AI innovation, would be an ideal institution to help USSOCOM rise to the challenge. Thus, a new collaboration between the MIT School of Engineering, MIT Professional Education, and USSOCOM was born: a six-week AI and machine learning crash course designed for special operations personnel.

“There has been tremendous growth in the fields of computing and artificial intelligence over the past few years,” says Chandrakasan. “It was an honor to craft this course in collaboration with U.S. Special Operations Command and MIT Professional Education, and to convene experts from across the spectrum of engineering and science disciplines, to present the full power of artificial intelligence to course participants.”

In speaking to course participants, Clarke underscored his view that the nature of threats, and how U.S. Special Operations defends against them, will be fundamentally affected by AI. “This includes, perhaps most profoundly, potential game-changing impacts to how we can see the environment, make decisions, execute mission command, and operate in information-space and cyberspace.”

Because AI and machine learning have applications in nearly every field, the course was taught by MIT faculty as well as military and industry representatives from many disciplines, including electrical and mechanical engineering, computer science, brain and cognitive science, aeronautics and astronautics, and economics.

“We assembled a lineup of people who we believe are some of the top leaders in the field,” says Sertac Karaman, an associate professor in MIT’s Department of Aeronautics and Astronautics and faculty co-organizer of the USSOCOM course. “All of them are able to come in and contribute a unique perspective. This was just meant to be an introduction … but there was still a lot to cover.”

The potential applications of AI span civilian and military uses and include advances in areas such as restorative and regenerative medical care, cyber resiliency, natural language processing, computer vision, and autonomous robotics.

A fireside chat between MIT President L. Rafael Reif and Eric Schmidt, an MIT innovation fellow, co-founder of Schmidt Futures, and former chair and CEO of Google, painted a particularly vivid picture of how AI will shape future conflicts.

“It’s quite obvious that the cyber wars of the future will be largely AI-driven,” Schmidt told course participants. “In other words, they’ll be very vicious and they’ll be over in about 1 millisecond.”

However, the capabilities of AI represented only one aspect of the course. The faculty also emphasized the ethical, social, and logistical issues inherent in the implementation of AI.

“People don't know, actually, [that] some existing technology is quite fragile. It can make mistakes,” says Karaman. “And in the Department of Defense domain, that could be extremely damaging to their mission.”

AI systems are vulnerable both to intentional tampering and attacks and to mistakes caused by programming and data oversights. For instance, images can be intentionally distorted in ways that are imperceptible to humans but still mislead AI. In another example, a programmer could “train” AI to navigate traffic under ideal conditions, only to have the program malfunction in an area where traffic signs have been vandalized.
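To make the idea of an imperceptible distortion concrete, here is a toy sketch. It is not taken from the course. It assumes a hypothetical linear classifier over 10,000 pixel values and applies a step in the style of the fast gradient sign method, a well-known technique from the adversarial-examples research literature. Each pixel moves by at most 0.01, yet because thousands of tiny changes add up in the model's score, the decision flips. The weights, image, and budget are all made-up illustrative values. Real image models are nonlinear, so this only illustrates the mechanism.

```python
import random


def score(w, b, x):
    """Linear model score; a positive score means the (hypothetical) target class."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def sign(v):
    """Return -1, 0, or +1 depending on the sign of v."""
    return (v > 0) - (v < 0)


def fgsm_perturb(w, x, eps):
    """Fast-gradient-sign-style step against a linear model.

    For score = w . x + b, the gradient with respect to x is just w, so moving
    every pixel by -eps * sign(w_i) lowers the score as much as possible under
    a per-pixel budget of eps. Results are clipped to the valid range [0, 1].
    """
    return [min(1.0, max(0.0, xi - eps * sign(wi))) for wi, xi in zip(w, x)]


# Hypothetical setup: 10,000 "pixels" and random weights, with the bias chosen
# so the clean image scores +1.0 (classified as the target class).
rng = random.Random(0)
n = 10_000
w = [rng.uniform(-1.0, 1.0) for _ in range(n)]
x = [rng.uniform(0.2, 0.8) for _ in range(n)]
b = 1.0 - sum(wi * xi for wi, xi in zip(w, x))

eps = 0.01  # each pixel changes by at most 1% of the intensity range
x_adv = fgsm_perturb(w, x, eps)

print(f"clean score:       {score(w, b, x):+.2f}")
print(f"adversarial score: {score(w, b, x_adv):+.2f}")
print(f"max pixel change:  {max(abs(a - c) for a, c in zip(x, x_adv)):.4f}")
```

In the published method, the gradient comes from backpropagation through a neural network rather than from a fixed weight vector, but the sign-of-gradient step is the same.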

Asu Ozdaglar, the MathWorks Professor of Electrical Engineering and Computer Science, head of the Department of Electrical Engineering and Computer Science, and deputy dean of academics in the MIT Schwarzman College of Computing, told course participants that researchers must find ways to incorporate context and semantic information into AI models prior to “training,” so that they “don’t run into these issues which are very counterintuitive from our perspective … as humans.”

Read the full article on MIT News.