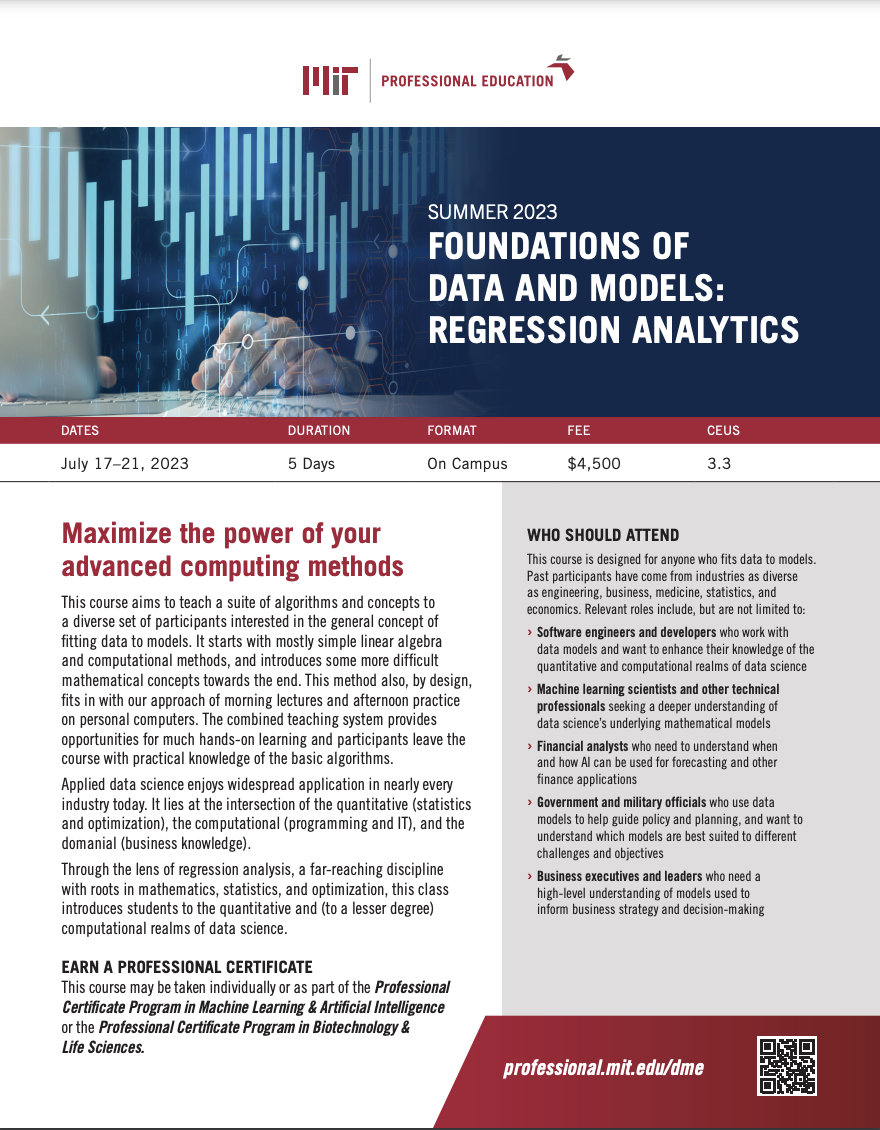

This course teaches a suite of algorithms and concepts to a diverse group of participants interested in the general problem of fitting data to models. It begins with relatively simple linear algebra and computational methods and introduces more advanced mathematical concepts toward the end. This progression is designed to fit our format of morning lectures followed by afternoon practice on personal computers. The combined format provides ample hands-on learning, and participants leave the course with practical knowledge of the basic algorithms.

Applied data science is used in nearly every industry today. It lies at the intersection of the quantitative (statistics and optimization), the computational (programming and IT), and the domain-specific (business knowledge).

Unfortunately, data science education currently seems to overemphasize nonparametric methods such as Artificial Intelligence and Machine Learning (more on this below), perhaps because such methods seem irresistibly powerful and arcane.

AI and ML certainly can be powerful, but only the practitioner with a firm grasp of the fundamentals of data and models can leverage such methods with a sure hand, knowing when and how to use them and, importantly, when not to.

The present class is such a foundational course in data and models. Through the lens of regression analysis, a far-reaching discipline with roots in mathematics, statistics, and optimization, Foundations of Data and Models introduces students to the quantitative and (to a lesser degree) computational realms of data science.

One way to unpack the field of data and models is to bisect it into two general categories:

Parametric Methods

In these approaches, a reasonable mathematical model of the system under consideration is proposed, and the “regression task” is to determine the parameters of that mathematical model as uniquely and as accurately as possible. Typical methods for doing so include Least Squares, Simulated Annealing, Genetic Algorithms, Quasi-Random and Grid Search, etc.
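To make the parametric idea concrete, here is a minimal Python sketch, assuming NumPy and a hypothetical decay model y = A·exp(−k·t) chosen only for illustration. It combines two of the methods named above: a grid search over the nonlinear parameter k and, for each trial k, a closed-form least squares solve for the linear parameter A. The function name `fit_exponential_decay`, the grid, and the synthetic data are assumptions, not course material.

```python
import numpy as np

def fit_exponential_decay(t, y, k_grid):
    """Fit the (hypothetical) model y ≈ A * exp(-k * t).

    For each trial k on the grid the model is linear in A, so A follows
    from a one-parameter least squares solve; the k with the smallest
    sum of squared residuals is kept.
    """
    best = None
    for k in k_grid:
        basis = np.exp(-k * t)                 # model shape for this trial k
        A = basis @ y / (basis @ basis)        # closed-form least squares estimate of A
        sse = np.sum((y - A * basis) ** 2)     # sum of squared residuals
        if best is None or sse < best[2]:
            best = (A, k, sse)
    return best

# Synthetic data generated with A = 2.0, k = 0.5 plus small Gaussian noise
# (illustrative values only).
rng = np.random.default_rng(0)
t = np.linspace(0.0, 10.0, 50)
y = 2.0 * np.exp(-0.5 * t) + rng.normal(0.0, 0.01, t.size)

A_hat, k_hat, sse = fit_exponential_decay(t, y, np.linspace(0.1, 1.0, 91))
print(f"A ≈ {A_hat:.3f}, k ≈ {k_hat:.3f}, SSE = {sse:.2e}")
```

Separating the linear parameter from the nonlinear one keeps the search one-dimensional; a finer grid, or a local optimizer started from the best grid point, could refine k further.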

Nonparametric Methods

Here no specific mathematical model of the system is assumed, though this does not mean that no mathematical model is used. Rather, a model is usually built into the method and is general enough to mimic a wide variety of possibly highly nonlinear behaviors of the system under consideration. Typical methods include Principal Component Analysis, Neural Networks or Deep Learning, Artificial Intelligence (AI), Machine Learning (ML), etc.
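For contrast, here is a minimal nonparametric sketch, assuming NumPy. It uses Nadaraya-Watson kernel regression, which is not among the methods listed above and is swapped in only because it fits in a few lines. The method is never told that the data follow a sine curve, yet the Gaussian kernel and bandwidth (the model built into the method) let it track that shape. The function name `kernel_regression`, the bandwidth value, and the synthetic data are hypothetical.

```python
import numpy as np

def kernel_regression(x_train, y_train, x_query, bandwidth):
    """Nadaraya-Watson estimate: a locally weighted average of y_train.

    No global functional form is assumed; the Gaussian kernel and the
    bandwidth play the role of the built-in, very general model.
    """
    # Scaled distances: rows are query points, columns are training points.
    u = (x_query[:, None] - x_train[None, :]) / bandwidth
    weights = np.exp(-0.5 * u ** 2)            # Gaussian kernel weights
    return weights @ y_train / weights.sum(axis=1)

# Nonlinear "truth" (a sine curve) that the method is never told about.
rng = np.random.default_rng(1)
x = np.sort(rng.uniform(0.0, 2.0 * np.pi, 200))
y = np.sin(x) + rng.normal(0.0, 0.1, x.size)

x_new = np.linspace(0.5, 5.5, 6)
y_hat = kernel_regression(x, y, x_new, bandwidth=0.3)
print(np.round(y_hat, 2))           # kernel estimates
print(np.round(np.sin(x_new), 2))   # true values, for comparison
```

The bandwidth is the main tuning knob: too small and the estimate chases noise, too large and it flattens real structure.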

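Each approach has pros and cons: parametric methods yield interpretable parameters but depend on the proposed model being appropriate, while nonparametric methods are more flexible but typically need more data and reveal less about how the underlying system works.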

Foundations of Data and Models is a course in parametric methods of regression. It gives students an intuitive understanding of the quantitative principles they need to excel when their data science journey arrives at AI and ML.

The course covers such topics as Statistics, Least Squares, Backus-Gilbert Methods, Simple Random and Grid Search Algorithms, Annealing and Genetic Algorithms, Errors in Nonlinear Regression, Solving Large Systems, Robust Regression with Regularization, Neural Networks, and an Introduction to Artificial Intelligence.

We strongly suggest that students take this (or a similar) course before embarking on the world of Artificial Intelligence and Machine Learning.

COVID-19 Updates: MIT Professional Education fully expects to hold courses on campus during the Summer of 2023 (only a handful will be delivered in the live virtual or hybrid modality). If MIT's COVID-19 policies change and a course cannot be held on campus, we will deliver it in a live virtual format.

Certificate of Completion from MIT Professional Education